LLM Fail to Acquire Context

LLM Fail to Acquire Context

A Benchmark for Evaluating LLMs’ Ability to Request Missing Information in Math Problems

Codes: https://github.com/frinkleko/LLM-Fail-to-Acquire-Context

Xinjie Shen (xinjie@gatech.edu) Georgia Institute of Technology 11 June 2025; LinkedIn | GitHub | Twitter

Welcome to the first blog in the Fragile LLM series! In this post, we’ll explore an intriguing challenge faced by Large Language Models (LLMs):

tl;dr by Conrad Irwin: “Context omission: Models are bad at finding omitted context.” You may also be interested in severely unaligned LLMs in the physical world regarding their privacy understanding: Post and Paper.

Introduction

Large Language Models (LLMs) have transformed many fields with their impressive capabilities in understanding and generating language. However, a common frustration arises when LLMs provide mismatched implementations or make uncontrolled assumptions, leading to unexpected and undesirable results. This challenge often occurs when LLMs encounter incomplete or ambiguous inquiries, which users may unconsciously provide due to their interaction habits and expectations.

To investigate this, we’ve developed a benchmark to evaluate how well LLMs can identify missing context in math problems and actively seek out additional information. This benchmark focuses on mathematical problems, where precise conditions are essential for accurate solutions.

Benchmark

In recent years, the application of LLMs in solving mathematical problems has gained significant attention. Numerous datasets and specialized models have emerged, accompanied by rich, human-verified benchmarks. We adopt a high-quality, verified dataset collection to build our benchmark.

Data Preparation

We used the AM_0.5M dataset from Hugging Face as our starting point. This dataset contains a variety of math problems with verified answers. Our focus was on simple, easy-to-evaluate questions, which answer is often a single letter as choices (e.g., A, B, C, D) or a numeric value. This format simplifies the evaluation process and allows for straightforward comparison of LLM responses against ground truth answers. We extracted about 70,000 such easy <question, answer> pairs from the dataset. Benefiting from the dataset’s properties, we ensure that each question is solvable and has a verified answer.

We then use a system prompt (find it in appendix) to instruct the LLM to extract essential conditions or definitions from the questions and return the condition along with the modified incomplete question without the condition. Ideally, the new question should be unanswerable without the extracted condition.

An additional step of filtering was applied to ensure that the extracted conditions were indeed necessary for solving the modified question. This involved checking that the incomplete question could not be answered correctly without the extracted condition and potential leaking of information (remove cases where the incomplete question could still be answered correctly without the extracted condition by representative LLMs) . Finally, we sampled a subset of 2,686 entries from the processed dataset to form the benchmark.

Here’s an example:

{

"original_question": "Consider a function $f(x)$ defined over $\\mathbb{R}$ such that for any real number $x$, it satisfies $f(x) = f(x - 2) + 3$, and $f(2) = 4$. Find the value of $f(6)$.",

"condition": "f(x) = f(x - 2) + 3",

"incomplete_question": "Consider a function $f(x)$ defined over $\\mathbb{R}$ such that $f(2) = 4$. Find the value of $f(6)$.",

"answer": "10",

}

Key Properties

To this point, we have established a benchmark with the following key properties:

- Condition Extraction: Each question includes a critical condition necessary for solving the problem.

- Unsolvable without Condition: The modified question should not be answered correctly without the extracted condition.

- Ground Truth Answers: Each question has a verified answer for reliable evaluation.

Experiment

Overview

We aim to use this benchmark to answer the following research questions (RQs):

- RQ1: How often do LLMs ask for clarification, provide direct answers, or refuse to answer incomplete questions?

- RQ2: How does performance differ when LLMs ask for clarification versus when they have the full question?

- RQ3: How do the quality of questions and assumptions-as-hallucinations affect problem-solving ability?

Baselines

To help us obtain insights into LLMs’ behavior when faced with incomplete questions, we include most representative and state-of-the-art models such as GPT-4o, GPT-4o-mini, Gemini-2.5-pro-preview-03-25, and their variants with different prompts. For each model, we evaluate three different baselines:

- Raw: LLMs are only explicitly prompted with output formats.

- Questionable: LLMs are prompted explicitly with output and question formats. If LLM follows the question formats to ask questions, a user simulator will respond to that question. The tested LLMs can ask questions or answer within a maximum of 5 interactions.

The above two scenarios are tested with the incomplete question.

- Full Information: LLMs explicitly prompted with output formats. While the input question is the original question with the extracted condition included.

In the above baselines, one shot example is included in the prompt to help LLMs understand the expected output format or question format. The user simulator is designed to respond to questions based on the extracted conditions, simulating a human-like interaction. All prompts can be found in the Appendix section.

Here’s a brief illustration of our prompting format:

| Raw | Questionable | Full Information |

|---|---|---|

<think>...</think><answer>...\boxed{A}</answer> | <think>...</think>(<question>...</question>)(<answer>...\boxed{A}</answer>), () means optional, interaction ends when | Same as Raw |

All baselines are prompted to provide final answers in a specific format (\boxed{}), allowing us to identify when LLMs provide direct answers to incomplete questions. We detect and extract special question tags, to indicate that LLMs have asked for clarification.

RQ1: Response Behavior Pattern

In this section, we analyze facing the incomplete questions (Raw and Questionable baselines) and the full questions (Full Information baseline), how is LLMs’ response behavior?

It is easy to categorize LLMs’ response behavior with the following three patterns:

- Direct Answers: LLMs provide answers in the \boxed{} format, indicating they have attempted to answer the question directly.

- Answers without \boxed{}: LLMs provide content in answer tags but do not use the \boxed{} format to provide a final answer.

- No Answer Tags: LLMs do not provide any answer tags, which indicates there is issue in following the instruction of output format.

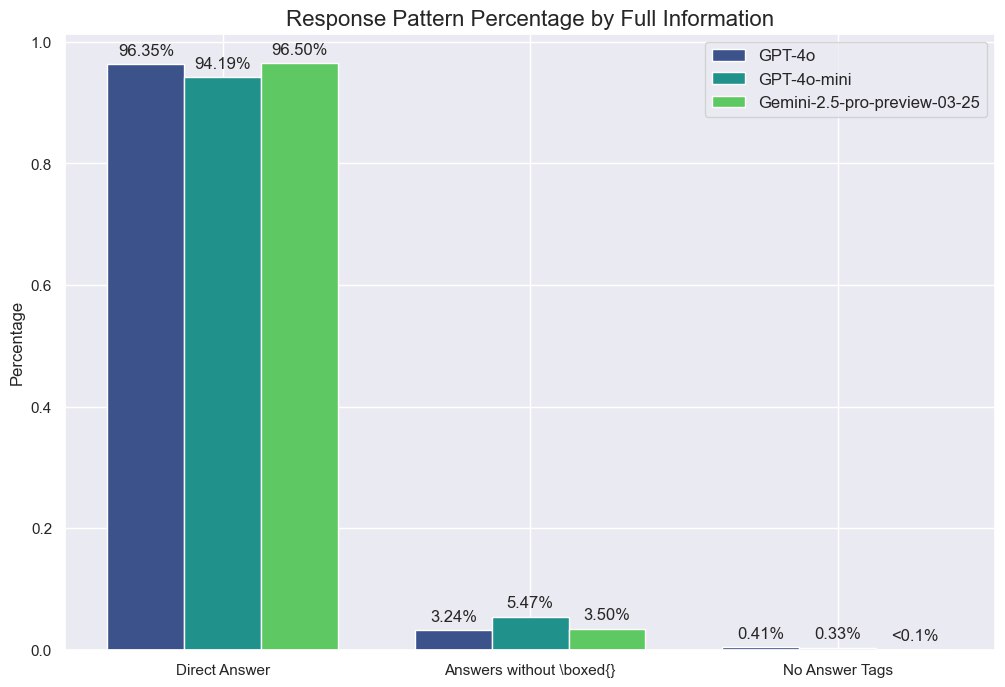

Full Information Baseline

Let’s start with the Full Information baseline, where LLMs are provided with the complete question, including the extracted condition. In this scenario, LLMs should ideally provide direct answers in the \boxed{} format.

In the figure, the pattern did follow our expectation, with a significant majority of responses being direct answers in the \boxed{} format. This indicates good instruction-following behavior and provides a reference for the following comparison.

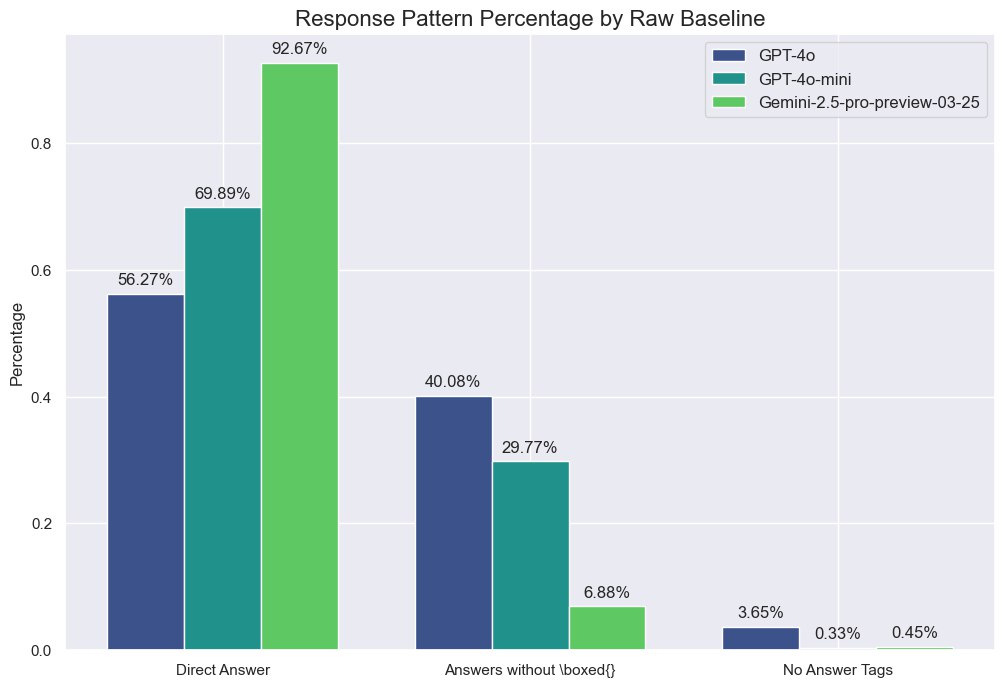

Raw Baseline

When it turns to the Raw baseline, where LLMs are only prompted with output formats, we observe a different pattern. The following figure shows the distribution of response behavior across different models:

Direct answers still dominate the responses across all models, especially in Gemini-2.5-pro-preview-03-25, where over 90% are direct answers. While GPT-4o-mini and GPT-4o show a significant portion of answers without the \boxed{} format, indicating that these models refused to provide an answer while generating contents or the answer is not included in \boxed{}.

We can also easily tell the response behavior patterns begin to vary across different models.

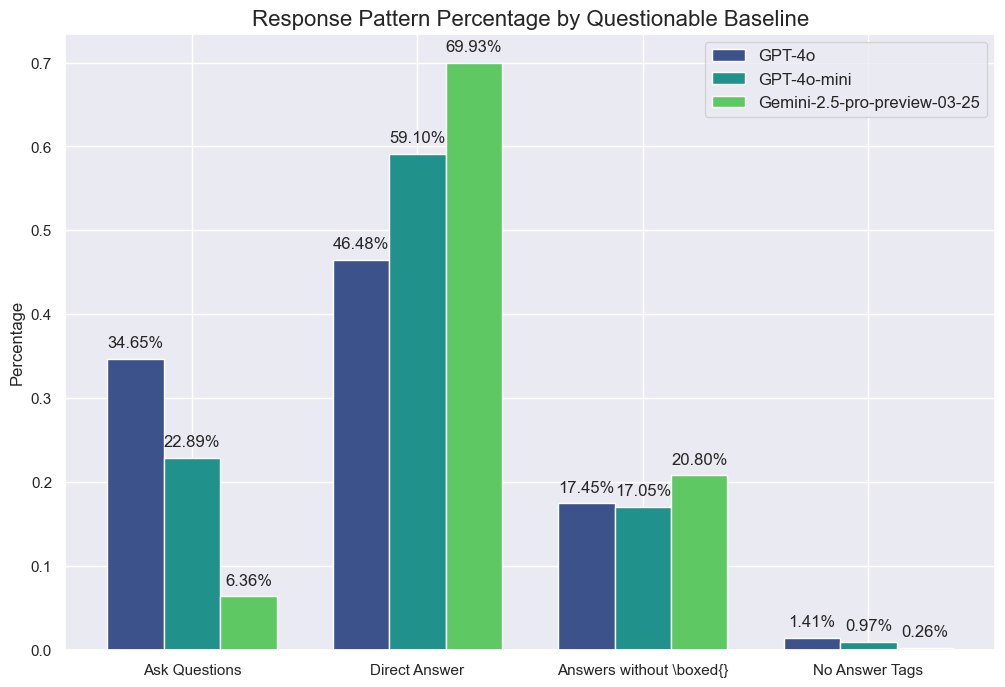

Questionable Baseline

In the Questionable baseline, LLMs are allowed to ask for clarification and interact with a user simulator, therefore we add the “ask questions” pattern, defined by tags, which would trigger interaction with the user simulator.

We could see that

- model tendency to ask questions is various while all significantly lower than the direct answer pattern, even facing such incomplete questions.

- The direct answer pattern is still the most common, but the percentage of answers without \boxed{} format has significantly increased facing the incomplete questions, which indicates LLM could identify the incompleteness of the question in some extent.

To summary with above observations, we can conclude that:

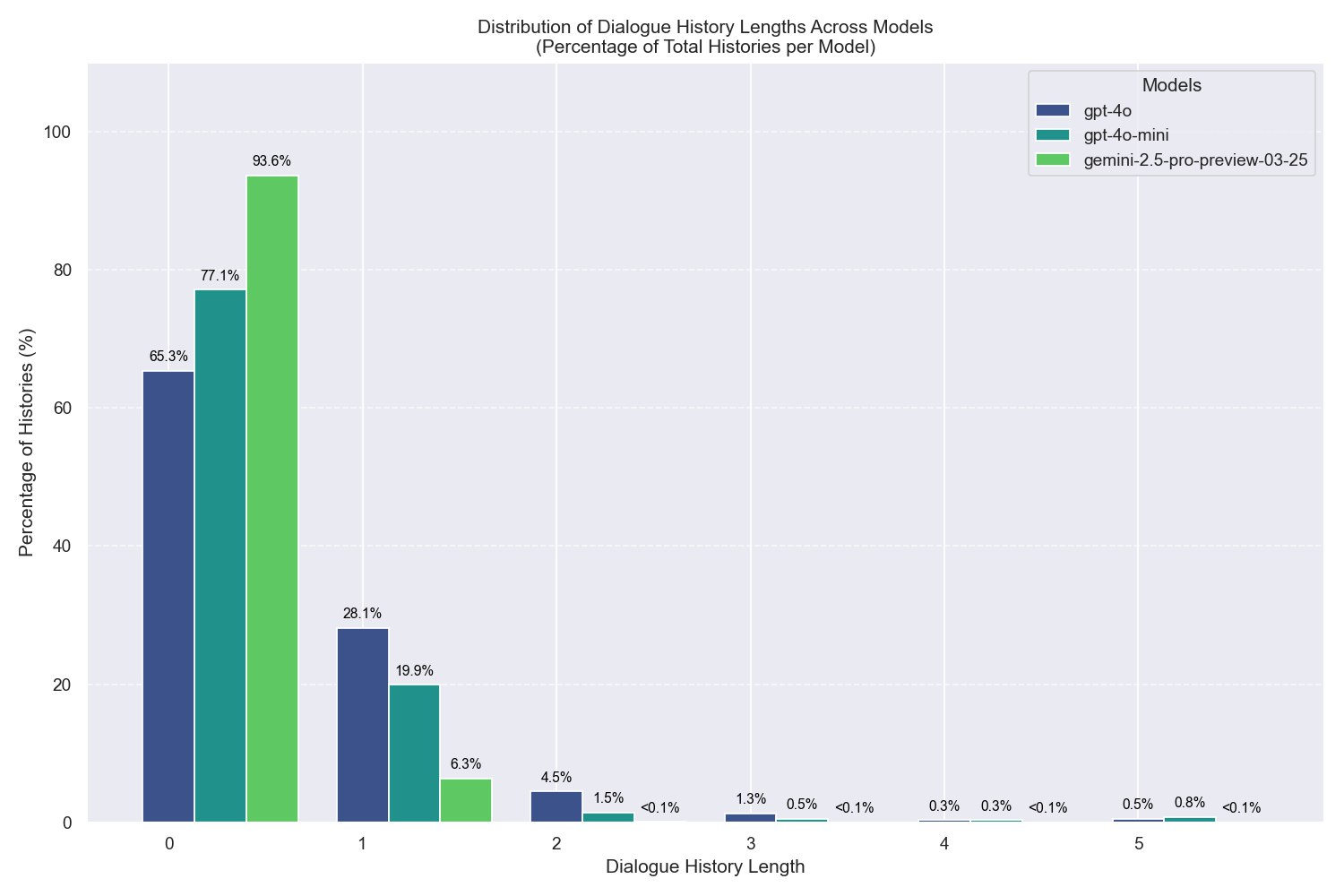

Let us further investigate the interaction turns between LLMs and the user simulator. We define the interaction turns as the number of interactions between LLMs and the user simulator, which is capped at 5 turns. The results are shown in the following figure:

It can be find that most of the interactions are within 2 turns, which indicates that LLMs seldomly ask many times of questions, potentially due to correct inquiry or passive questioning. We would investigate of the quality of the questions and condition acquisition in the following sections (RQ3).

RQ2: Performance Comparison

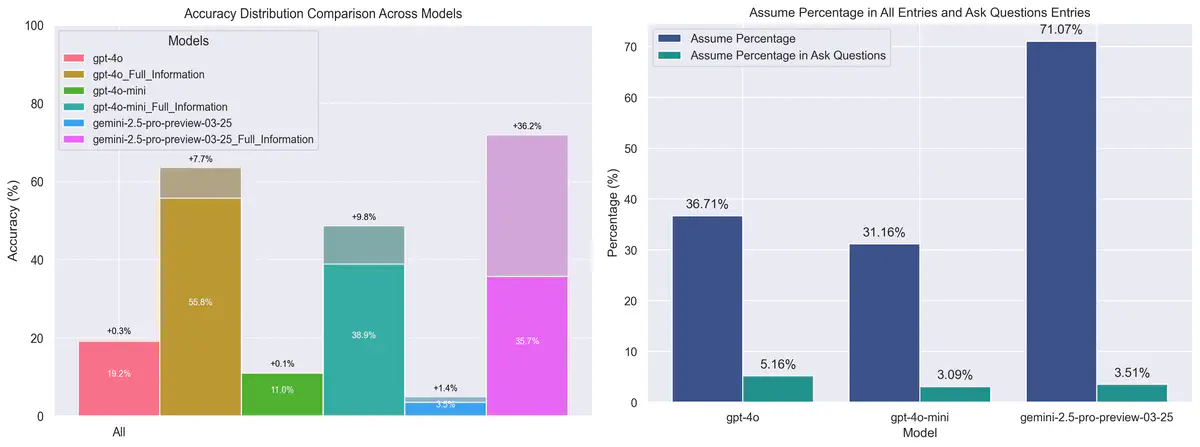

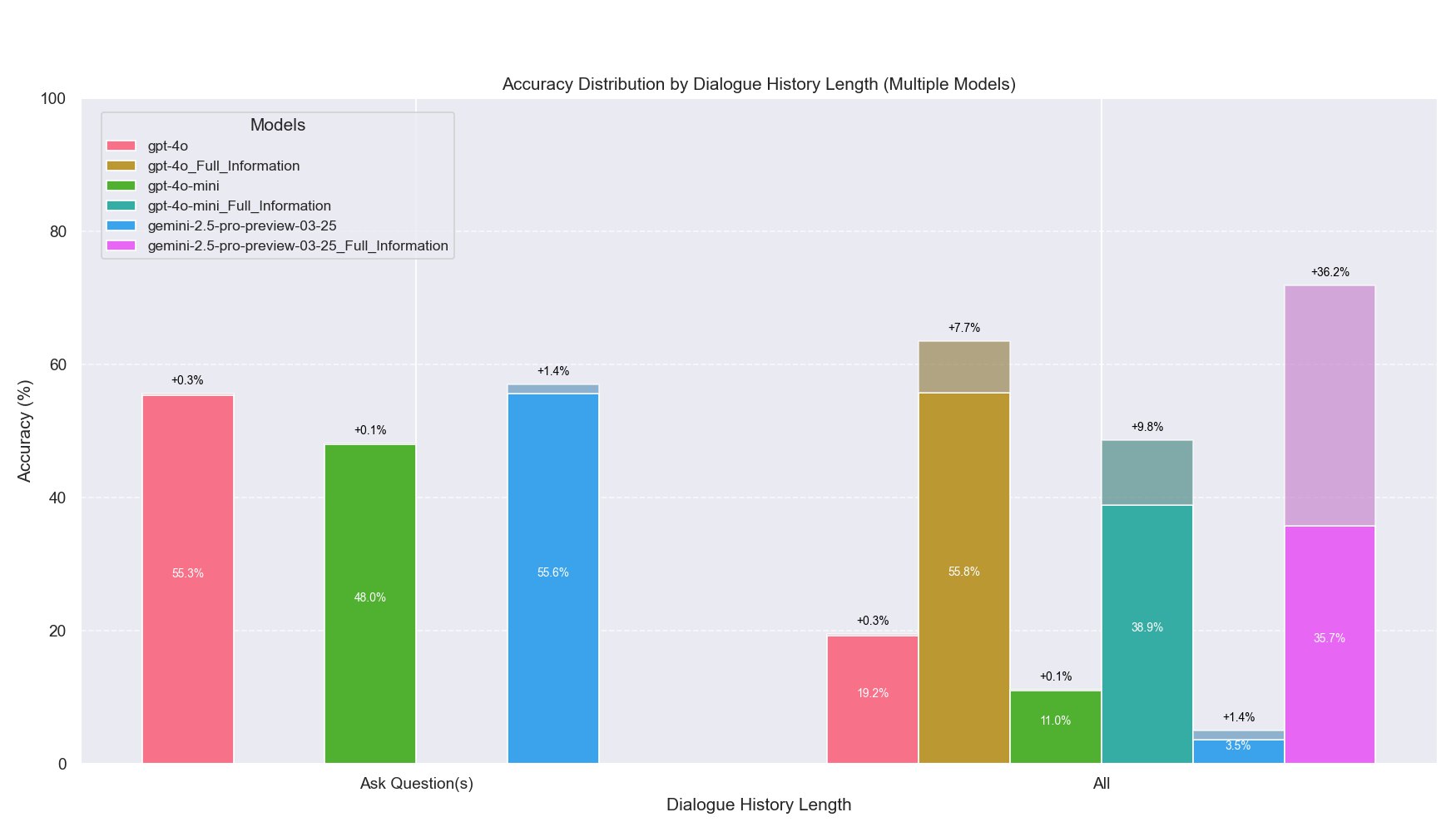

We present the absolute accuracy percentages of the Questionable Baseline when asking questions, compared to the Full Information Baseline. We allow for minor formatting errors such as do not following the tags as long as an answer is provided in the “boxed{}” format, indicated by the semi-transparent sections in the figures.

This easy to find

- The accuracy of the Questionable Baseline is significantly lower than the Full Information Baseline under all entries, which indicates that LLMs struggle to answer incomplete questions without the full context.

- Despite the lower accuracy of the Questionable Baseline when asking questions compared to the Full Information Baseline, the results are more comparable than the overall accuracy across all entries. Previous sections showed that LLMs often provide direct answers without asking questions. The gap between full information and question-asking accuracy suggests that LLMs may struggle to formulate high-quality questions and fully grasp the context. To investigate the questions quality, we will continue with RQ3.

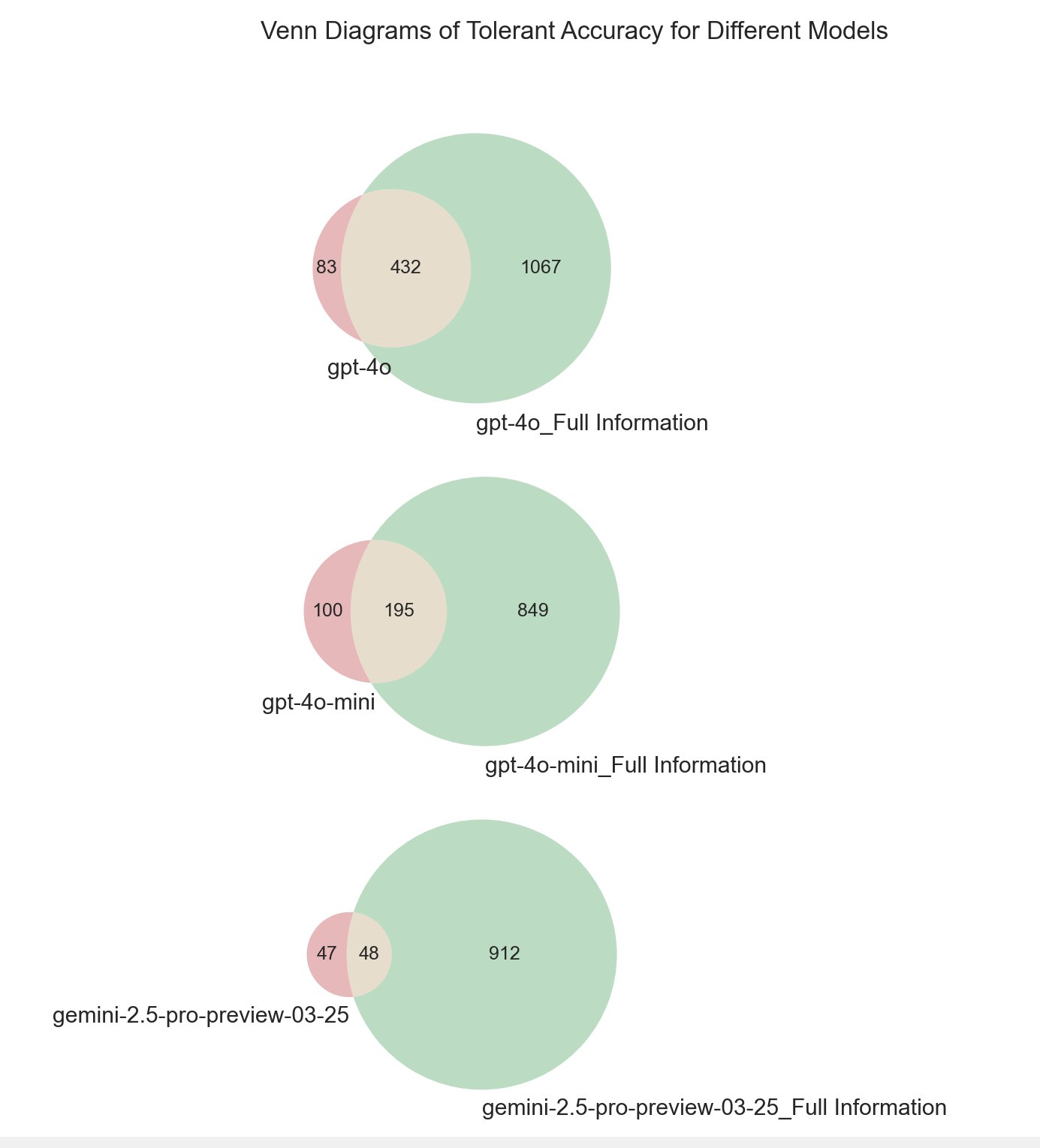

In the figures below, we analyze the Venn diagrams comparing the accuracy of question-answering between the Questionable Baseline and Full Information Baseline. Interestingly, there are a few instances where the Questionable Baseline correctly answers entries that the Full Information Baseline fails to. We believe this is due to LLM may recognize new acquired conditions from the user simulator with more attentions then plainly answering the question with the full information. We acknowledge that it may also be due to the inherent randomness in LLMs, while suggest that the amount is negligible and does not significantly affect the overall results.

RQ3: Question Quality and Assumptions

In RQ1, we presented the distribution of interaction turns. Now, we aim to investigate the quality of questions asked by LLMs in the Questionable, reflecting by how well LLMs acquire conditions from the user simulator. We will also analyze the assumptions made by LLMs, which can lead to hallucinations and errors.

Here we use an LLM judge to determine whether the LLM has correctly acquired conditions or made assumptions. The LLM judge is a separate model, GPT-4o. It helps to generate judgments on two aspects given the conversation history and ground truth conditions: 1) whether the LLM asks questions to acquire conditions from the user simulator, and 2) whether the LLM assumes any conditions not present in the input questions.

To ensure judgment accuracy, we sampled 100 entries and manually verified the alignment between human and LLM judgments. GPT-4o exhibited a limited error rate of <5%, while other models like GPT-4o-mini did not pass the alignment tests. The prompts used are detailed in the Appendix.

Our goal is to assess the quality of LLM-generated questions, particularly whether the model can acquire conditions from the user simulator.

| Model | gpt-4o | gpt-4o-mini | gemini-2.5-pro-preview-03-25 |

|---|---|---|---|

| Acquire Percentage | 91.51% | 91.71% | 97.08% |

The table above shows the percentage of questions where LLMs successfully acquired the condition.

The gap between the Questionable Baseline’s asks questions accuracy and the Full Information Baseline’s accuracy can be partially attributed to failure in acquiring conditions based on this analysis.

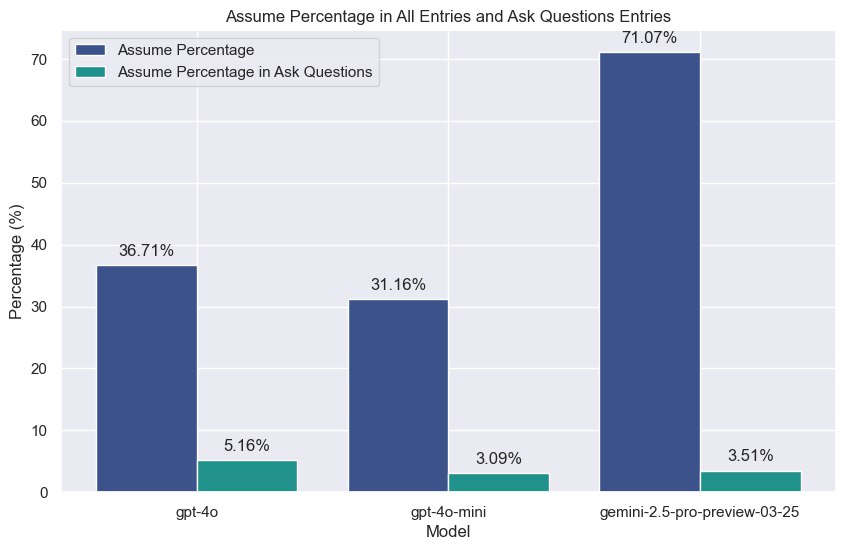

Furthermore, we’d like to explain the failure cases related to assumptions. In practice, we noticed that LLMs often make assumptions, such patterns continue facing incomplete questions. With the help of the LLM judge, we can analyze the assumptions made by LLMs in the Questionable Baseline. The following figure shows the distribution of assumptions made by LLMs across different models, in all cases and in cases where LLMs ask questions.

It can be found that the assumption percentage is significantly lower when LLMs ask questions compared to all cases. Meanwhile, there is a surprisingly high assumption percentage in all cases, especially for Gemini-2.5-pro-preview-03-25, where the assumption percentage is over 70%.

We then discuss about the accuracy when LLMs make assumptions without asking questions. It can be found, as long as the LLMs make assumptions without asking questions, the accuracy is 0.

| Model | gpt-4o | gpt-4o-mini | gemini-2.5-pro-preview-03-25 |

|---|---|---|---|

| Accuracy in Assume Without Questions Entries | 0 in 938 | 0 in 818 | 0 in 1903 |

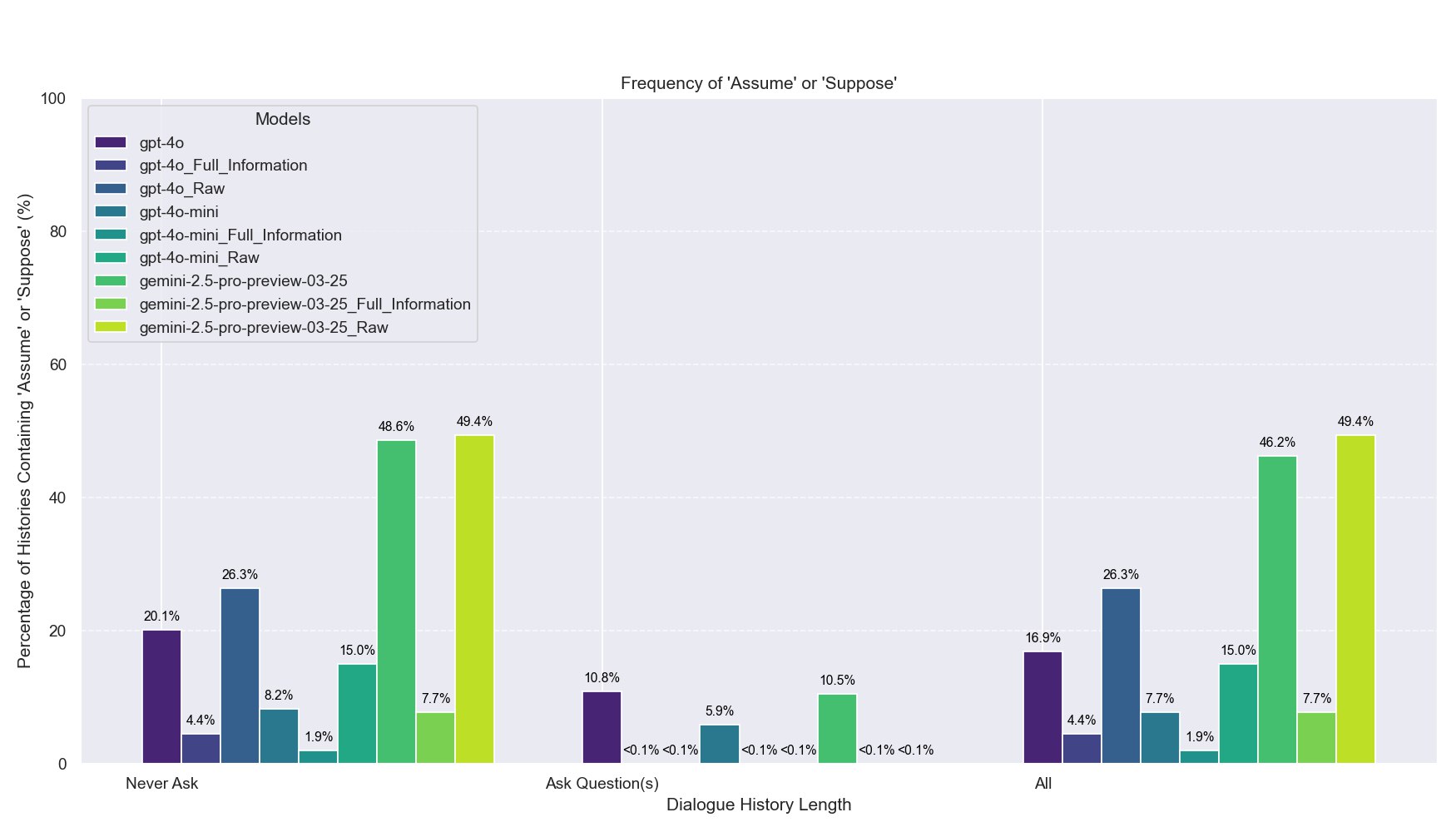

We also include a simple token level percentage of ‘assume’ or ‘suppose’ in the LLMs’ responses, as reference for the judgers’ judgment. The following figure shows the distribution of such tokens across different models.

This figure has shown similar patterns to above assumption analysis. It’s noticeable that by only detecting the token appearance, some case may be misclassified as assumptions (for example, intermediate variable definitions), while the LLM judge can help to filter out such cases.

Limitations

Domain Specificity

One obvious limitation of this benchmark is that it is only focused on mathematical problems, which may not generalize to other domains, even though the underlying issue and intuition is consistent across various tasks.

Model Variability

Another limitation is the variability in model performance. Different LLMs may exhibit varying degrees of effectiveness facing such incomplete questions. Specially, we only tested representative reasoning models, while they are usually considered to be better at handling complex reasoning tasks. We also guess, reasoning models may be more “confident” in their assumptions, leading to more hallucinations-as-assumptions, which shows great curiosity in further investigation of reasoning models vs non-reasoning models in this benchmark. Similarly, due to the costs of running LLMs, we only tested a limited number of models.

Future Work

We are very interested in extending this benchmark to address the limitations mentioned above and release a formal academic paper in the future. We believe that the insights gained from this benchmark can be valuable for improving LLMs’ interaction capabilities.

Takeaway

Again,

Conclusion

This benchmark highlights the potential for LLMs to improve their interaction and problem-solving capabilities by better handling incomplete information. By focusing on context acquisition, we can enhance LLMs’ effectiveness in academic and practical settings.

Actively seeking internship and industry collaboration opportunities. Contact me

Cite

If you find this blog or our codebase useful, please consider citing:

@misc{shen2025llmfail,

title={LLM Fail to Acquire Context},

author={Shen, Xinjie},

year={2025},

howpublished={\url{[https://sunset-jupiter-cf3.notion.site/Failing-to-Acquire-Context-A-Benchmark-for-Evaluating-LLMs-Ability-to-Request-Missing-Informatio](https://oatllm.notion.site/oat-zero)n--20fb7e977237802ca126ed554ccb8083}},

note={Notion Blog},

}

Appendix

Prompts

Extraction Prompt

You are a helpful assistant. You will receive a question and you should extract condition or definition from the question, and return the condition and question without the condition in json. The new question should be smooth and natural but just remove the condition. If there is no obvious condition, set the condition to be an empty string, and the new question to be the original question. The condition should be a string (if there are multiple conditions, only pick one), and the new question should be a string. The output should follows the format in the example below.

For example, question is "Let the set $A=\{1,2,3\}$, and the set $B=\{0,1,2\}$. Then, the number of elements in $A\cup B$ is $(\quad)$.\n\nA: \$2$\n\nB: \$3$\n\nC: \$4$\n\nD: \$5$", you should return {"condition": "$A=\{1,2,3\}$", "new_question": "Let the set $B=\{0,1,2\}$. Then, the number of elements in $A\cup B$ is $(\quad)$.\n\nA: \$2$\n\nB: \$3$\n\nC: \$4$\n\nD: \$5$"}

Raw Prompt

You are a helpful assistant. Your output format should be <think></think><answer></answer>.Return your final answer in /boxed{} format. Your answer should be included in <answer> tag.

For example, if the question is "What is 1+1?", you could return <think> I need to calculate 1+1. A: 2 B:3 C:5 D:8 </think><answer> I think the answer is /boxed{A}.</answer>.

Questionable Prompt

You are a helpful assistant. Your output format should be <think></think><question></question><answer></answer>, the question and answer part are optional. If you have include <answer> tag, question will be ignored. Return your final answer in /boxed{} format. Your answer should be included in <answer> tag.

For example, if the input question is "What is 1+1?", you could return <think> I need to calculate 1+1. A: 2 B:3 C:5 D:8 </think><answer> I think the answer is /boxed{A}.</answer> to answer it directly. If the question is "What is the capital of France?", you could return <think> I need to find the capital of France. </think><question> What is the capital of France? </question> if you may have question.

Full Question Prompt

The prompt is the same as the raw prompt, but the input question is the full question with condition.

User Simulator Prompt

You are a user simulator. You only answer questions using your information (provided below) if the question is related, for example, ask about definition, explanation or clarification of the information you have. If the question is not relevant to the condition, you can answer it freely, as long as it is not contradict to our information. Your information is: {condition}.

Judger Prompt

You are an AI assistant that evaluates the dialogue history. The dialogue history is consisted of the conversation between the user and the LLM.

Your task is to determine

1. if the LLM successfully acquired the condition from the user's answer to LLM's question, given the ground truth condition and the dialogue history.

2. if the LLM assumed or supposed any condition, which is not from the init question.

Ground Truth Condition: {condition}

Dialogue History: {dialogue_history}

Response Format should be a comma-separated list of three integers, which is in the format of <x,y,z>, example:

<0,0> # 1 means True, 0 means False

Here is an example that helps you understand.

Ground Truth Condition: 1000 employees, among which: senior managers account for 5%, middle managers account for 15%, and general staff account for 80%

Dialogue History:

{{

"role": "user",

"content": "In order to understand a certain situation of the company, a stratified sampling method is used to survey 120 people. Therefore, the number of general staff to be sampled is______."}}

{{

"role": "assistant",

"content": "<think>To determine the number of general staff to be sampled, I need to know the proportion of general staff within the company. Without this information, I cannot calculate the exact number. If the proportion of general staff is given, I would multiply that proportion by 120 to find the number of general staff to sample. </think><question>What is the proportion of general staff in the company?</question>"

}},

{{

"role": "user",

"content": "The proportion of general staff in the company is 80%."

}},

{{

"role": "assistant",

"content": "<think> Since the proportion of general staff in the company is 80%, and the total sample size is 120 people, I need to calculate 80% of 120. </think><answer> The number of general staff to be sampled is 0.80 \\times 120 = /boxed{{96}}. </answer>"

}}

Your Response:

<1,0>